At the International Supercomputing Conference (ISC) earlier this week, Intel committed to delivering a complete technological solution for ExaScale computing performance later in the decade.

The world's fastest supercomputer is currently the K Computer in Japan, built by Fujitsu, which delivers over 8 PetaFLOP/s. It consumes almost 10 megawatts of power and costs about $10 million a year just to operate. The system is still incomplete; once fully built, it is expected to reach 10 PFLOP/s.

To get to ExaScale computing, we need to increase this performance by more than 100 times. That raises serious technological problems, energy consumption chief among them. To put it bluntly, today's computing technology cannot deliver an ExaScale system at a realistic cost.
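A quick back-of-the-envelope calculation shows why energy is the sticking point. This Python sketch uses the rounded K Computer figures quoted above (8 PFLOP/s, 10 MW) and assumes, purely as a worst case, that power grows linearly with performance at today's efficiency.

```python
# Rounded K Computer figures quoted above (assumed inputs).
K_PFLOPS = 8.0        # sustained performance, PetaFLOP/s
K_MEGAWATTS = 10.0    # power draw, megawatts
EXA_PFLOPS = 1000.0   # 1 ExaFLOP/s = 1,000 PetaFLOP/s

# How many times faster an ExaScale machine must be.
speedup = EXA_PFLOPS / K_PFLOPS

# Worst-case assumption: power scales linearly with performance
# at today's efficiency.
naive_power_mw = K_MEGAWATTS * speedup

print(f"Required speedup: {speedup:.0f}x")
print(f"Naive ExaScale power: {naive_power_mw:,.0f} MW (~{naive_power_mw / 1000:.2f} GW)")
```

At roughly a gigawatt, a naively scaled machine would need a power plant of its own, which is why efficiency rather than raw speed dominates the ExaScale discussion.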

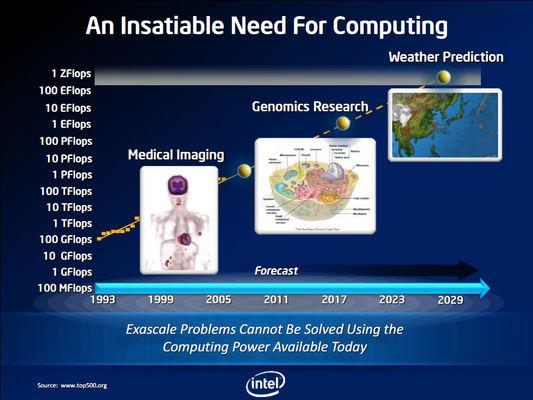

Demand for high-performance computing (HPC) has never been higher, as it is applied to more and more real-world problems. ExaScale computing could help deliver on goals in areas such as healthcare, climate and weather research, and energy technology.

Why ExaScale? And How?

Kirk Skaugen, Vice President in the Intel Architecture Group and General Manager of the Data Center Group, gave the example of high-quality CT scans: their number-crunching requirements are so heavy that even reaching PetaScale-level performance could give physicians high-quality information in real time.

At the ExaScale level, an entire functional cell could be modeled and studied. NASA looks further still, envisioning highly accurate climate and weather data from even more powerful machines.

To get to ExaScale performance, another cost-curve transformation is required. When Intel entered the server market in the 1990s, a high-performance server (by the standards of the time) cost roughly $58,000; today it costs less than $3,800. The number of servers in the world has more than doubled since 2000, and Intel expects it to double again in the next five years.
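The server figures can be worked through the same way. This sketch computes the minimum price drop implied by the two quoted costs and the compound annual growth rate implied by Intel's expected five-year doubling; both are derived from the article's numbers, not from separate data.

```python
# Server prices quoted above, in USD.
COST_1990S = 58_000   # roughly, for a high-end server of the time
COST_TODAY = 3_800    # upper bound ("less than")

# Because today's price is an upper bound, this ratio is a lower bound.
cost_ratio = COST_1990S / COST_TODAY

# Doubling in five years: solve (1 + r) ** 5 == 2 for the annual rate r.
annual_growth = 2 ** (1 / 5) - 1

print(f"Servers are at least {cost_ratio:.1f}x cheaper")
print(f"Doubling in 5 years implies ~{annual_growth:.1%} growth per year")
```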

For supercomputers, the clearest way to see the scale of the change is to express cost per unit of computing power. In 1997, in a TeraFLOP/s machine, a GigaFLOP/s of performance cost around $55,000. In 2010, in a 500 TFLOP/s machine, it cost less than $100, though the figure varies widely from project to project. ExaScale computing requires a similar transformation of the cost curve.
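Putting the supercomputer figures side by side shows the size of the shift, and why it has to happen again. This sketch treats the quoted per-GigaFLOP/s prices as flat rates and counts only compute (no facilities, power or software), so the totals are illustrative rather than real system prices.

```python
GFLOPS_PER_TFLOPS = 1_000
GFLOPS_PER_EFLOPS = 1_000_000_000

# Quoted prices per GigaFLOP/s, in USD (treated as flat rates).
cost_per_gflops_1997 = 55_000   # 1 TFLOP/s-class machine
cost_per_gflops_2010 = 100      # 500 TFLOP/s-class machine (upper bound)

# Implied compute cost of each machine at its quoted price point.
system_1997 = 1 * GFLOPS_PER_TFLOPS * cost_per_gflops_1997
system_2010 = 500 * GFLOPS_PER_TFLOPS * cost_per_gflops_2010

# What one ExaFLOP/s would cost if the 2010 price point simply carried over.
exa_at_2010_prices = GFLOPS_PER_EFLOPS * cost_per_gflops_2010

print(f"1997 system: ${system_1997:,}")
print(f"2010 system: ${system_2010:,}")
print(f"Improvement: {cost_per_gflops_1997 / cost_per_gflops_2010:.0f}x per GFLOP/s")
print(f"ExaFLOP/s at 2010 prices: ${exa_at_2010_prices:,}")
```

Both historical machines land in the same tens-of-millions range despite a 500x difference in performance; at 2010 prices an ExaFLOP/s would cost on the order of $100 billion.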

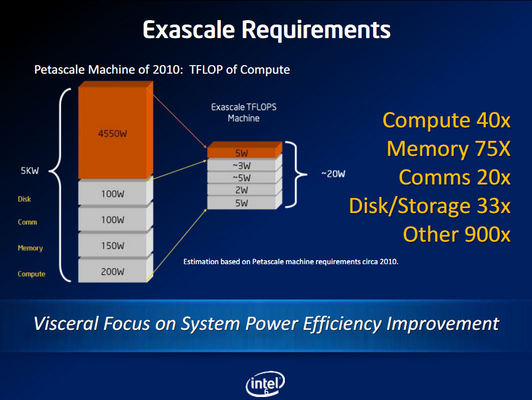

Intel's ExaScale commitment is to deliver systems with 100 times the performance of today's fastest while only doubling overall power consumption. If that doesn't sound hard, consider that in a PetaScale machine, a TeraFLOP/s of compute requires around 5 kilowatts of power. To meet its goal, Intel needs to cut that figure to around 20 watts per TeraFLOP/s in an ExaScale machine.

The ExaScale machine as a whole would draw about 20 megawatts in full operation.
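The power targets reduce to simple ratios. This sketch derives the per-TeraFLOP/s budget and the equivalent efficiency in GFLOP/s per watt from the 1 ExaFLOP/s and 20 MW figures, then compares it with the roughly 5 kW per TeraFLOP/s quoted for PetaScale machines.

```python
EXA_TFLOPS = 1_000_000            # 1 ExaFLOP/s expressed in TeraFLOP/s
EXA_BUDGET_W = 20_000_000         # 20 MW total system power
PETASCALE_W_PER_TFLOPS = 5_000    # ~5 kW per TeraFLOP/s today (quoted above)

# Power budget per TeraFLOP/s in the ExaScale machine.
watts_per_tflops = EXA_BUDGET_W / EXA_TFLOPS

# The same target expressed as energy efficiency.
gflops_per_watt = (EXA_TFLOPS * 1_000) / EXA_BUDGET_W

# How much power per TeraFLOP/s has to fall.
reduction = PETASCALE_W_PER_TFLOPS / watts_per_tflops

print(f"Target: {watts_per_tflops:.0f} W per TFLOP/s")
print(f"Equivalent to {gflops_per_watt:.0f} GFLOP/s per watt")
print(f"Power per TFLOP/s must fall ~{reduction:.0f}x")
```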

Another issue is programmability. Rather than relying on new programming tools and methods, Intel wants to scale today's software programming model, so that essentially the same source code can be run through a next-generation compiler and scale to systems with more Xeon cores per socket, for example, or to Intel's next-generation Many Integrated Core (MIC) architecture.

Intel wants the industry to avoid "costly detours" down new proprietary paths.

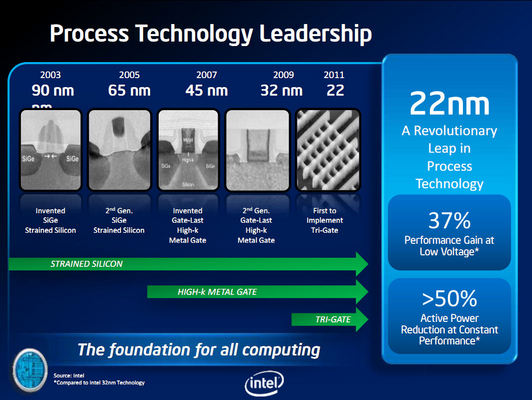

On the silicon side, Intel announced earlier this year that it had created a new 3D "Tri-Gate" transistor that will power a new generation of microprocessors and allow Intel to keep aggressively pursuing Moore's Law. Since 2003, Intel's process technology has shrunk from 90 nanometers (nm) to 65 nm, 45 nm and 32 nm; with the Tri-Gate transistor it now moves to 22 nm, and soon to 14 nm, in keeping with Intel's goal of introducing a new process generation every other year.
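The node names follow a steady rhythm: each step shrinks the nominal feature size by roughly 0.7x, which ideally halves the area of a given circuit and lets transistor density roughly double every generation, the pace behind Moore's Law. The sketch below just computes those ratios from the names listed; treat the area figures as idealized, since node names only loosely track physical dimensions.

```python
# Intel process generations named above (nominal feature size, nm).
NODES_NM = [90, 65, 45, 32, 22, 14]

# Linear shrink from each generation to the next.
linear_ratios = [newer / older for older, newer in zip(NODES_NM, NODES_NM[1:])]

for (older, newer), linear in zip(zip(NODES_NM, NODES_NM[1:]), linear_ratios):
    area = linear ** 2  # idealized: circuit area scales with the square
    print(f"{older} nm -> {newer} nm: {linear:.2f}x linear, ~{area:.2f}x area")
```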

The first generation of 22 nm technology provides another leap in process technology: compared with Intel's 32 nm planar transistors, it offers a 37 percent performance gain at low voltage and a 50 percent reduction in active power at constant performance.

Intel's Many Integrated Core (MIC) microarchitecture, which grew out of its cancelled Larrabee GPU project, is built on the 22 nm process and will launch with more than 50 cores, aimed squarely at high-performance computing.

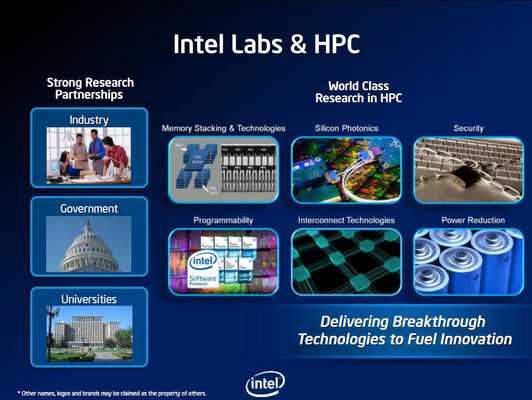

Reaching ExaScale computing will require cooperation among industry bodies, academia and governments around the world to overcome the challenges ahead. Intel has already formed three laboratories in Europe to work on ExaScale challenges, and more are likely to follow. These combined efforts will need to deliver advances in memory stacking, silicon photonics, security, parallel programming, interconnects and power reduction.

The performance of the TOP500 #1 system is projected to reach 100 PetaFLOP/s in 2015 and to break the 1 ExaFLOP/s barrier in 2018. By the end of the decade, the fastest system on Earth is forecast to deliver more than 4 ExaFLOP/s.
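The projections imply a steep compound growth rate. This sketch derives it, taking the K Computer's rounded 8 PFLOP/s as the 2011 starting point (an assumption; the projections themselves do not state a baseline) along with the 2015 and 2018 figures above.

```python
# TOP500 #1 performance in PetaFLOP/s. The 2011 value is the K Computer's
# rounded figure (assumed baseline); 2015 and 2018 are the projections.
milestones = {2011: 8.0, 2015: 100.0, 2018: 1_000.0}

annual_rates = {}
years = sorted(milestones)
for start, end in zip(years, years[1:]):
    total = milestones[end] / milestones[start]
    # Compound annual growth: the yearly factor that yields `total` over the span.
    annual_rates[(start, end)] = total ** (1 / (end - start))
    print(f"{start}-{end}: {total:.1f}x overall, ~{annual_rates[(start, end)]:.2f}x per year")
```

For reference, the jump from the first TeraFLOP/s system (1997) to the first PetaFLOP/s system (2008) was 1,000x in 11 years, about 1.87x per year; the same cadence from a 2018 ExaFLOP/s lands on the 2029 ZettaFLOP/s date mentioned below.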

If I'm still around then, I look forward to seeing what Intel and everyone else have in store on the road to ZettaFLOP/s by 2029.
