Researchers at North Carolina State University have found a way to improve CPU performance by more than 20 percent using a GPU built on the same processor die.

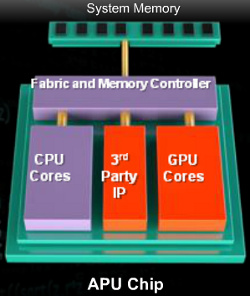

The research was performed in conjunction with AMD, which outlined plans to increase CPU/GPU integration in a presentation to analysts last week. Based on that presentation, the techniques identified in this research could appear in AMD processors within the next two years.

Although this research appears to target current PC technology, most likely AMD's Fusion APU, it also has obvious applications for improving ARM processor performance. ARM-based SoC (system on a chip) designs emphasize power efficiency over speed, making them the standard choice for smartphones, tablets, and other mobile devices.

Along with plans to move into SoC production, possibly including ARM chips, AMD is promoting standardization across different processor architectures. Its HSA (Heterogeneous Systems Architecture) initiative is intended to standardize how the various components integrated on a single processor interact with each other.